Felix Klein’s Erlangen Programme of 1872 introduced a methodology to unify non-Euclidean geometries. Similarly, geometric deep learning (GDL) constitutes a unifying framework for various neural network architectures. GDL is built from the first principles of geometry (symmetry and scale separation) and enables tractable learning in high dimensions. Symmetries play a vital role in preserving structural information of geometric data and allow models (i.e., neural networks) to adjust to different geometric transformations.

In this context, spheres exhibit a maximal set of symmetries compared to other geometric entities in Euclidean space. The orthogonal group O(n) fully encapsulates the symmetry structure of an nD sphere, including both rotational and reflection symmetries. In this thesis, we focus on integrating these symmetries into a model as an inductive bias, which is a crucial requirement for addressing problems in 3D vision as well as in natural sciences and their related applications.

In Paper A, we focus on 3D geometry and use the symmetries of spheres as geometric entities to construct neurons with spherical decision surfaces (spherical neurons) using a conformal embedding of Euclidean space. We also demonstrate that spherical neuron activations are non-linear due to the inherent non-linearity of the input embedding and thus do not necessarily require an activation function. In addition, we show graphically, theoretically, and experimentally that spherical neuron activations are isometries in Euclidean space, which is a prerequisite for the equivariance contributions of our subsequent work.

In Paper B, we closely examine the isometry property of the spherical neurons in the context of equivariance under 3D rotations (i.e., SO(3)-equivariance). Focusing on 3D in this work and based on a minimal set of four spherical neurons (one learned spherical decision surface and three copies), the centers of which are rotated into the corresponding vertices of a regular tetrahedron, we construct a spherical filter bank. We call it a steerable 3D spherical neuron because, as we verify later, it constitutes a steerable filter. Finally, we derive a 3D steerability constraint for a spherical neuron (i.e., a single spherical decision surface).

In Paper C, we present a learnable point-cloud descriptor invariant under 3D rotations and reflections, i.e., the O(3) actions, utilizing the steerable 3D spherical neurons we introduced previously, as well as vector neurons from related work. Specifically, we propose an embedding of the 3D steerable neurons into 4D vector neurons, which leverages end-to-end training of the model. The resulting model, termed TetraSphere, sets a new state of the art in classifying randomly rotated real-world object scans. Thus, our results reveal the practical value of steerable 3D spherical neurons for learning in 3D Euclidean space.

In Paper D, we generalize to nD the concepts we previously established in 3D, and propose O(n)-equivariant neurons with spherical decision surfaces, which we call Deep Equivariant Hyperspheres. We demonstrate how to combine them in a network that directly operates on the basis of the input points and propose an invariant operator based on the relation between two points and a sphere, which, as we show, turns out to be a Gram matrix.

In summary, this thesis introduces techniques based on spherical neurons that enhance the GDL framework, with a specific focus on equivariant and invariant learning on point sets.

Sep 27, 2024

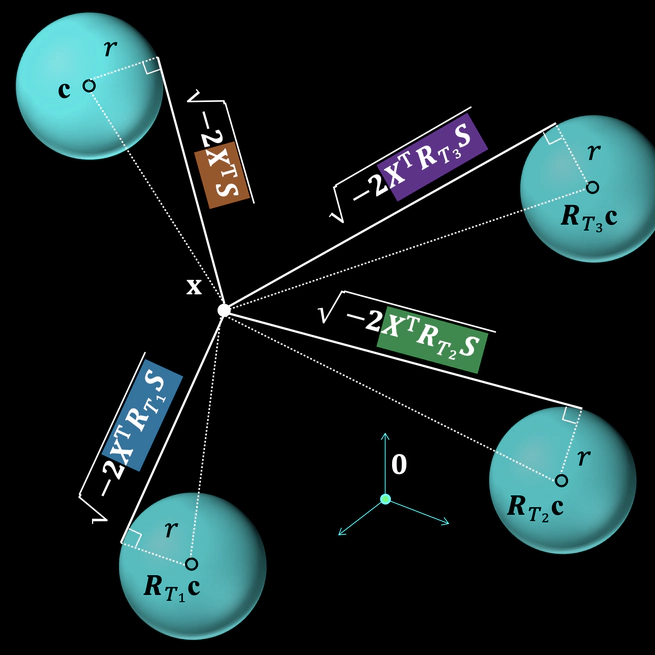

In this paper, we utilize hyperspheres and regular $n$-simplexes and propose an approach to learning deep features equivariant under the transformations of $n$D reflections and rotations, encompassed by the powerful group of $\text{O}(n)$. Namely, we propose $\text{O}(n)$-equivariant neurons with spherical decision surfaces that generalize to any dimension $n$, which we call Deep Equivariant Hyperspheres. We demonstrate how to combine them in a network that directly operates on the basis of the input points and propose an invariant operator based on the relation between two points and a sphere, which, as we show, turns out to be a Gram matrix. Using synthetic and real-world data in $n$D, we experimentally verify our theoretical contributions and find that our approach is superior to the competing methods on $\text{O}(n)$-equivariant benchmark datasets (classification and regression), demonstrating a favorable speed/performance trade-off.
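
The abstract notes that the proposed invariant operator turns out to be a Gram matrix. The sketch below only illustrates the general reason a Gram matrix of point coordinates is unchanged by any $\text{O}(n)$ transformation; it is not the operator from the paper. The dimensions, the random data, and the helper name `gram` are assumptions made for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, num_points = 5, 7

# Rows are points in nD (random data, purely illustrative).
points = rng.standard_normal((num_points, n))

# A random orthogonal matrix from the QR decomposition of a Gaussian matrix.
# It may be a rotation or a reflection; both belong to O(n).
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))


def gram(p):
    """Matrix of pairwise inner products between the rows of p."""
    return p @ p.T


# Apply the same orthogonal transformation to every point.
transformed = points @ Q.T

# (P Q^T)(P Q^T)^T = P Q^T Q P^T = P P^T, since Q^T Q = I.
gram_is_invariant = np.allclose(gram(points), gram(transformed))
print(gram_is_invariant)
```

Because only inner products enter the Gram matrix, the check holds for rotations and reflections alike, which is what invariance under the full group $\text{O}(n)$ requires.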

Jul 22, 2024

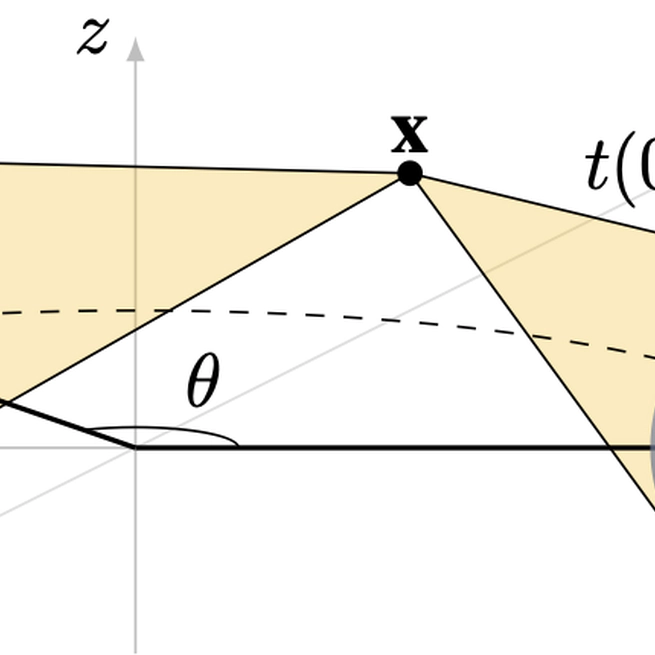

Emerging from low-level vision theory, steerable filters found their counterpart in prior work on steerable convolutional neural networks equivariant to rigid transformations. In our work, we propose a steerable feed-forward learning-based approach that consists of neurons with spherical decision surfaces and operates on point clouds. Such spherical neurons are obtained by conformal embedding of Euclidean space and have recently been revisited in the context of learning representations of point sets. Focusing on 3D geometry, we exploit the isometry property of spherical neurons and derive a 3D steerability constraint. After training spherical neurons to classify point clouds in a canonical orientation, we use a tetrahedron basis to quadruplicate the neurons and construct rotation-equivariant spherical filter banks. We then apply the derived constraint to interpolate the filter bank outputs and, thus, obtain a rotation-invariant network. Finally, we use a synthetic point set and real-world 3D skeleton data to verify our theoretical findings.
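
To make the "tetrahedron basis" more concrete, here is a small sketch of four unit vectors pointing to the vertices of a regular tetrahedron, together with the property that lets four directional responses determine any 3D vector. The vertex coordinates are one common convention and an assumption here; the code does not reproduce the steerability constraint derived in the paper.

```python
import numpy as np

# Unit vertices of a regular tetrahedron (assumed convention, not
# necessarily the orientation used in the paper).
vertices = np.array([
    [ 1.0,  1.0,  1.0],
    [ 1.0, -1.0, -1.0],
    [-1.0,  1.0, -1.0],
    [-1.0, -1.0,  1.0],
]) / np.sqrt(3.0)

# Pairwise inner products: 1 on the diagonal, -1/3 everywhere else.
pairwise = vertices @ vertices.T

# The vertices form a tight frame: sum_i v_i v_i^T = (4/3) * I.
frame = vertices.T @ vertices

# Any 3D vector can therefore be recovered from its four projections.
x = np.array([0.3, -1.2, 2.0])
projections = vertices @ x
reconstructed = 0.75 * (vertices.T @ projections)

print(np.allclose(reconstructed, x))
```

The tight-frame property is one way to see why four copies of a filter, oriented along tetrahedron vertices, can carry enough information to interpolate a response for an arbitrary 3D orientation.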

Jul 19, 2022

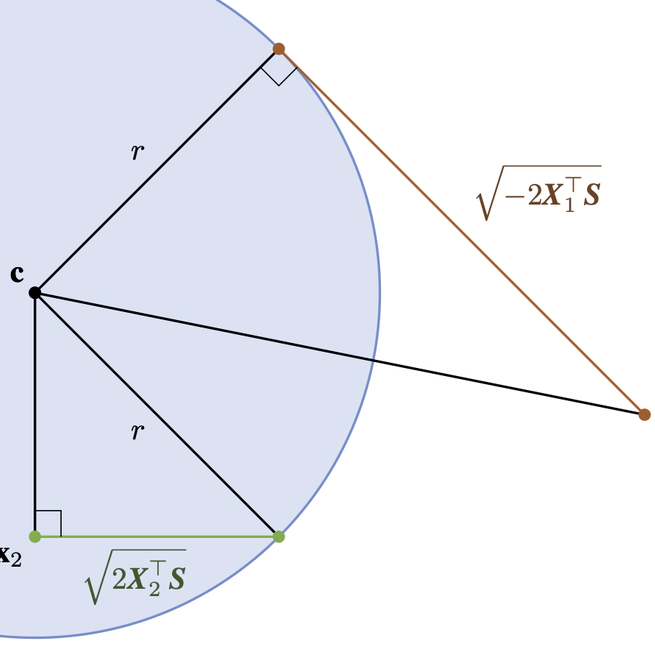

Solving geometric tasks involving point clouds by using machine learning is a challenging problem. Standard feed-forward neural networks combine linear or, if the bias parameter is included, affine layers and activation functions. Their geometric modeling is limited, which motivated the prior work introducing the multilayer hypersphere perceptron (MLHP). Its constituent part, i.e., the hypersphere neuron, is obtained by applying a conformal embedding of Euclidean space. By virtue of Clifford algebra, it can be implemented as the Cartesian dot product of inputs and weights. If the embedding is applied in a manner consistent with the dimensionality of the input space geometry, the decision surfaces of the model units become combinations of hyperspheres and make the decision-making process geometrically interpretable for humans. Our extension of the MLHP model, the multilayer geometric perceptron (MLGP), and its respective layer units, i.e., geometric neurons, are consistent with the 3D geometry and provide a geometric handle of the learned coefficients. In particular, the geometric neuron activations are isometric in 3D, which is necessary for rotation and translation equivariance. When classifying the 3D Tetris shapes, we quantitatively show that our model requires no activation function in the hidden layers other than the embedding to outperform the vanilla multilayer perceptron. In the presence of noise in the data, our model is also superior to the MLHP.
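
The statement that a hypersphere neuron can be implemented as a dot product of inputs and weights can be sketched as follows. The embedding convention used here, point $(x, -1, -\tfrac{1}{2}\lVert x\rVert^2)$ and sphere $(c, \tfrac{1}{2}(\lVert c\rVert^2 - r^2), 1)$, is a standard one from conformal geometric algebra and is assumed rather than taken from the abstract; the function names are hypothetical.

```python
import numpy as np


def embed_point(x):
    """Embed x in R^n as (x, -1, -||x||^2 / 2) in R^(n+2)."""
    x = np.asarray(x, dtype=float)
    return np.concatenate([x, [-1.0, -0.5 * (x @ x)]])


def embed_sphere(center, radius):
    """Represent a hypersphere as (c, (||c||^2 - r^2) / 2, 1) in R^(n+2)."""
    c = np.asarray(center, dtype=float)
    return np.concatenate([c, [0.5 * (c @ c - radius**2), 1.0]])


def hypersphere_neuron(x, center, radius):
    """Dot product of the embedded input with the sphere weights.

    Equals (r^2 - ||x - c||^2) / 2, so it is positive inside the sphere,
    zero on its surface, and negative outside.
    """
    return embed_point(x) @ embed_sphere(center, radius)


center, radius = np.array([1.0, 0.0, 0.0]), 2.0
inside = hypersphere_neuron([1.0, 1.0, 0.0], center, radius)
on_surface = hypersphere_neuron([3.0, 0.0, 0.0], center, radius)
outside = hypersphere_neuron([4.0, 0.0, 0.0], center, radius)

# Isometry check: rotating or reflecting the input and the sphere center
# together leaves the activation unchanged.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
x = np.array([1.0, 1.0, 0.0])
rotated = hypersphere_neuron(Q @ x, Q @ center, radius)
print(inside, on_surface, outside, np.isclose(rotated, inside))
```

The sign of the activation gives the geometric reading of the decision surface, and the quadratic term in the embedding is what makes the activation non-linear in the input even without a separate activation function.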

Sep 21, 2021